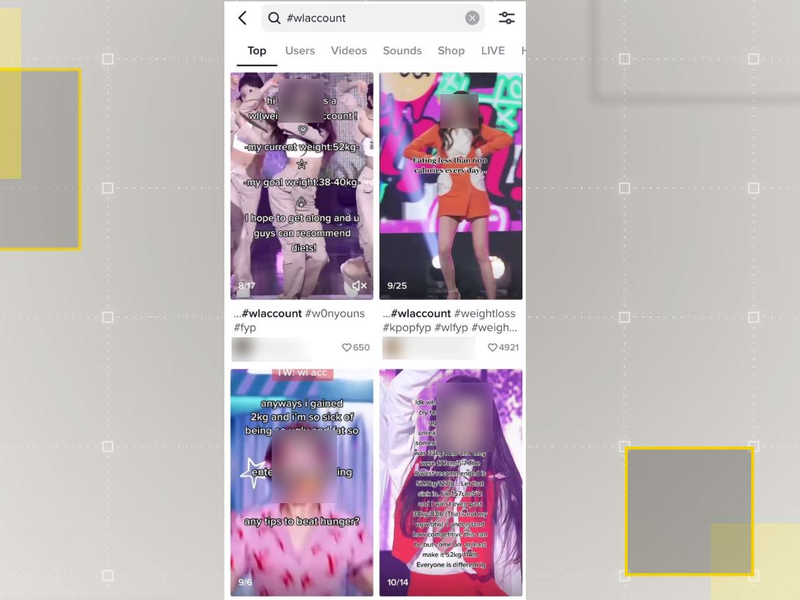

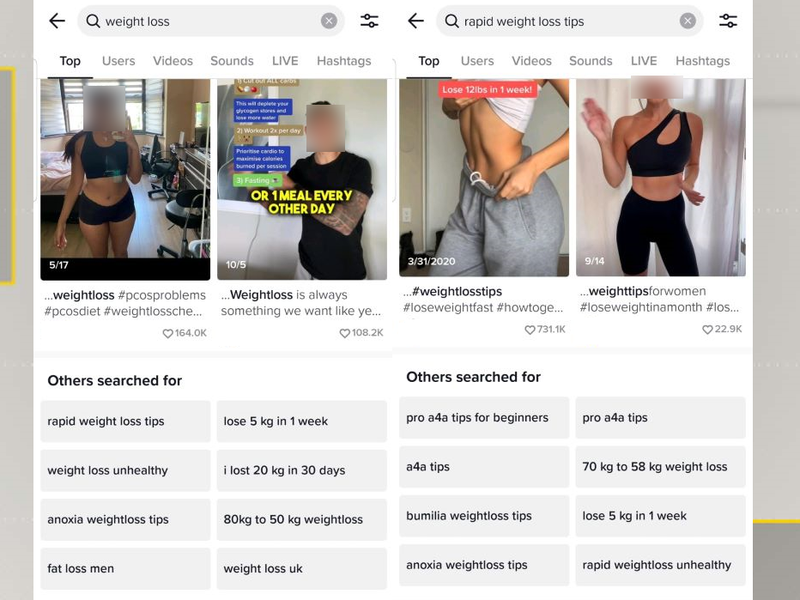

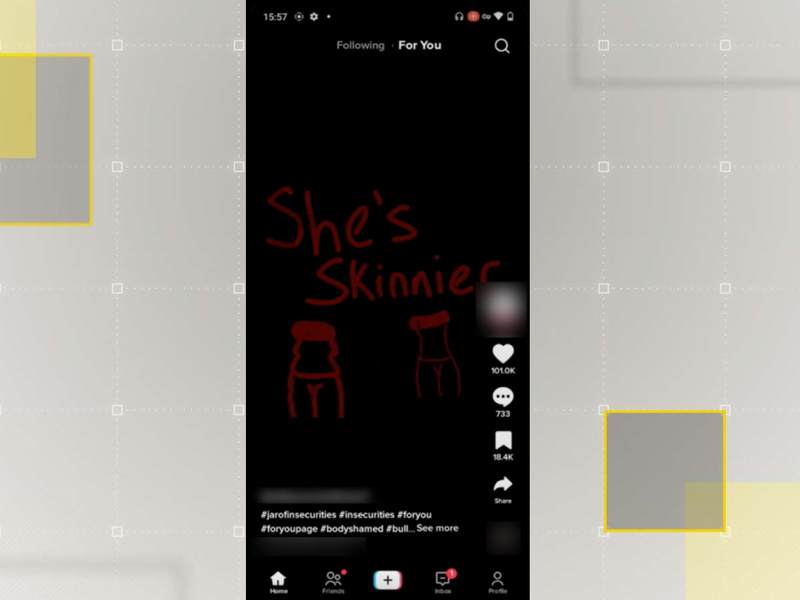

Sparking fresh concerns about TikTok’s influence on children, new research has found that the platform’s algorithm is pushing videos about eating disorders and self-harm to 13-year-olds.

According to research from the Center for Countering Digital Hate (CCDH), TikTok appears to be pushing videos about eating disorders and self-harm to children as young as 13.

The study, which looked into the platform’s powerful algorithm, found that it surfaced accounts promoting suicide within 2.6 minutes and recommended explicit pro-thinness clips within eight minutes.

Researchers set up accounts featuring characteristics of vulnerable teenagers and discovered that their For You Pages were shown twelve times as much content of this nature as standard accounts.

This has sparked fresh concerns about the app’s influence on impressionable users.

It comes as state and federal lawmakers in the US seek ways to crack down on TikTok over privacy and security fears, and to determine whether the platform is appropriate for teens.

It also follows a series of investigations from September into TikTok’s failure to inform the 10- to 19-year-olds who make up 25% of its userbase that it had been processing their data without consent or the necessary legal grounds.

The company vowed to change afterwards, but the latest findings from the CCDH suggest otherwise.

‘The results are every parent’s nightmare: young people’s feeds are bombarded with harmful, harrowing content that can have a significant cumulative impact on their understanding of the world around them, and their physical and mental health,’ wrote Imran Ahmed, CEO of CCDH, in the report titled Deadly by Design.