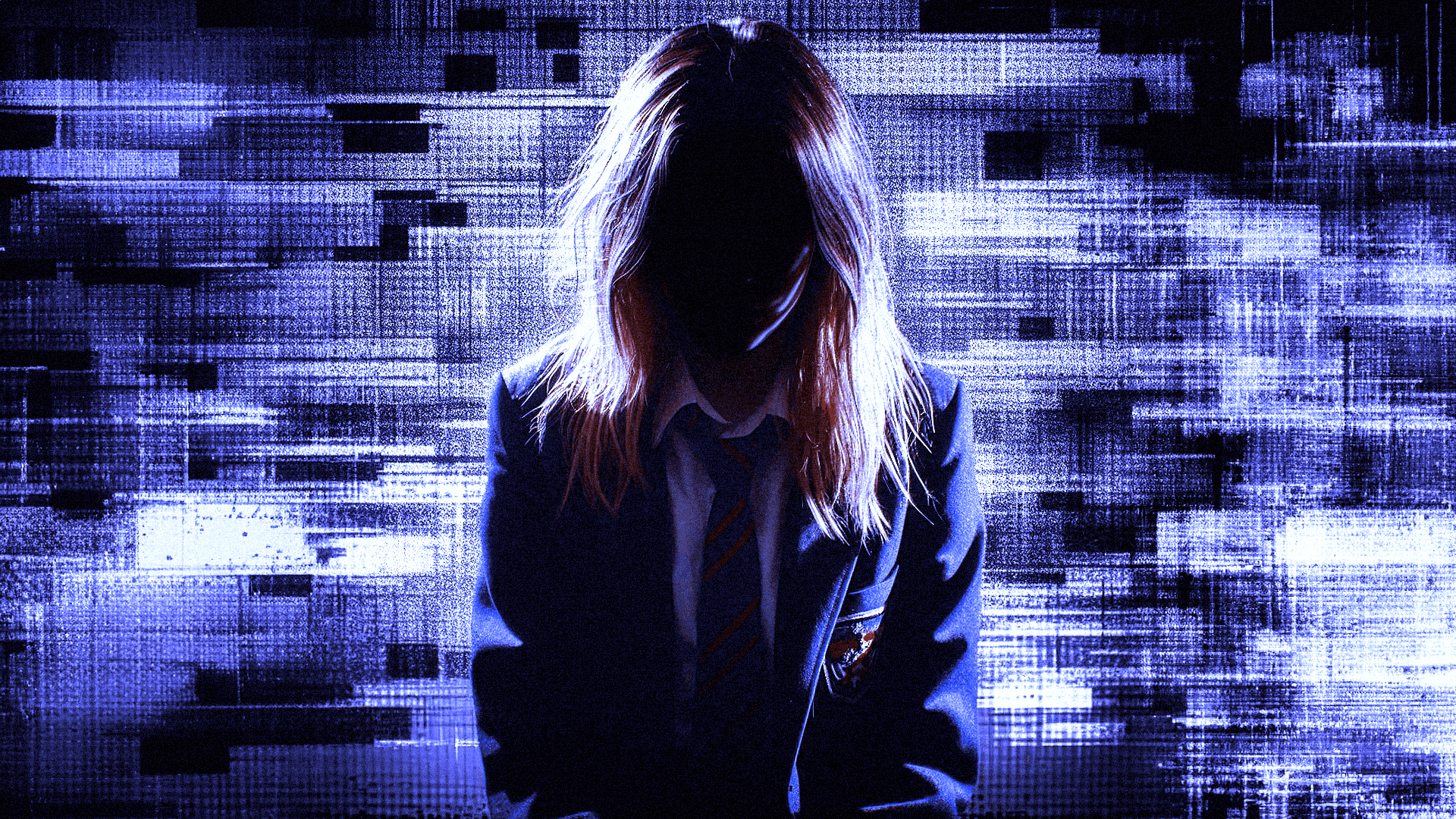

One in eight US teenagers under 18 personally know someone who has been targeted with pornographic deepfakes. What is being done about this privacy crisis?

We all know what deepfakes are at this point. Generative AI is adept at accurately capturing a person’s likeness and superimposing it over existing photos or videos. A mere text prompt and a handful of JPEGs are sufficient to do so.

The nefarious potential of the technology was being discussed as far back as 2019. While fears of fake propaganda manipulating world politics were exaggerated, warnings that deepfake pornography could create a privacy crisis have been vindicated. In fact, roughly 98% of all deepfake content today falls under this bleak category.

The latest report from Thorn, a nonprofit organisation focused on child safety, underscores the scope of the problem. The salient statistic is that one in eight US teens (under 18) say they personally know someone who has been victimised by deepfake pornography.

This data was obtained through an online survey of 1,200 people aged between 13 and 20 in October 2024, including 700-plus teenagers between 13 and 17. Of the respondents, 48% identified as male, 48% as female, and 4% as a gender minority.

As many as one in 17 said they had fallen victim to deepfake images or videos themselves. Given that the average class size in US public schools is around 18 students, that works out to roughly one directly affected student per classroom on average.
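For readers who want to check the classroom arithmetic, here is a minimal Python sketch. It uses only the two figures quoted above (one in 17, and a class of 18). The assumption that victims are spread independently and evenly across classrooms is ours, not the report's, and is made purely for illustration.

```python
# Figures quoted in the article.
victim_rate = 1 / 17   # share of respondents who said they were targeted
class_size = 18        # approximate average US public school class size

# Expected number of affected students in a class of this size.
expected_per_class = class_size * victim_rate

# Chance that a given class contains at least one affected student.
# ASSUMPTION (ours, not the report's): cases are independent and evenly spread.
p_at_least_one = 1 - (1 - victim_rate) ** class_size

print(f"Expected affected students per class: {expected_per_class:.2f}")
print(f"Chance a class has at least one: {p_at_least_one:.0%}")
```

Under those assumptions the expected count is just over one student per class, while the chance that any particular class has at least one affected student comes out at roughly two in three. In other words, the figure describes an average, not a guarantee for every classroom.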

Previous research from the same organisation found that one in 10 minors (aged 9 to 17) had heard of instances where their peers used AI to generate porn of other students. Unsurprisingly, girls were targeted in the overwhelming majority of cases across both papers.