Netflix has come under fire for including undisclosed AI imagery in a new true crime documentary. The backlash has sparked important conversations about media manipulation.

Like it, fear it, or loathe it, AI is now a part of our everyday lives. From education to entertainment, artificial intelligence is only set to keep growing, shaping nearly every aspect of our interaction with the media.

But these changes call for boundaries. AI's unpredictability and its unprecedented growth make it a dangerous tool if it is not used carefully. And in recent days, two major media companies have come under fire for failing to do just that.

Just days after studio A24 faced criticism for using AI-generated posters to promote its new film 'Civil War', Netflix has sparked a heated debate about the use of artificial intelligence in TV and film, especially when viewers aren't told about it.

Netflix has used AI-generated images in a new true crime doc ‘WHAT JENNIFER DID’ to present Jennifer Pan as happy & confident before she was convicted of murder.

Use of AI tools is not disclosed in the credits.

(Source: https://t.co/MYN1n1WTF0) pic.twitter.com/DdV5zea1oh

— DiscussingFilm (@DiscussingFilm) April 18, 2024

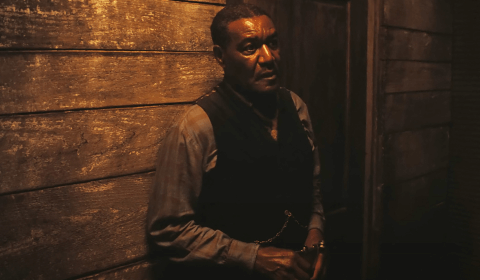

The streaming service was found to have used AI imagery of convicted murderer Jennifer Pan in its new true crime documentary 'What Jennifer Did.' The film follows police as they investigate the brutal killing of Pan's mother and the attempted murder of her father, initially believing Jennifer to be an innocent witness.

She eventually confessed to orchestrating a contract killing of her parents in an effort to inherit their money.

Around 28 minutes into the film, Pan’s high school friend, Nam Nguyen, describes her as ‘great to talk to.’

‘Jennifer, you know, was bubbly, happy, confident, and very genuine,’ he explains, as three images of Pan appear on screen.

The photos have since come under scrutiny because objects in the background, along with details of Jennifer's hands, ears, and hair, appear distorted.

Sorry Netflix, a true crime documentary is not the place for AI imagery https://t.co/ER97eygQpe pic.twitter.com/zvXE0Cdqv2

— Computer Arts (@ComputerArts) April 18, 2024