The social media giant is cracking down on videos that promote starvation and anorexia following reports that potentially harmful pro-weight-loss accounts are still available in search results.

It’s a day of reckoning for Gen Z’s app du jour. TikTok, the viral video-sharing platform wildly popular among teens, has announced plans to investigate content that promotes starvation and encourages eating disorders.

Recent findings by The Guardian show that harmful pro-weight-loss accounts remain easily searchable, despite measures the company had already taken to prohibit related advertising. It’s a crucial moment for the app, particularly given that 60% of its users are between the ages of 16 and 24. For such an impressionable demographic, videos of this nature can be incredibly damaging, especially in the aftermath of lockdown, which made many people’s already difficult relationships with food and diet considerably worse.

Part of the blame lies with TikTok’s highly personalised algorithm. Tailoring the For You Page (FYP) to each individual user is what sets the app apart from its competitors, but it can be dangerous for users who tend to watch triggering content. Rather than steering them away from such videos, TikTok automatically recommends a slew of similar ones, making it virtually impossible to avoid unhealthy themes during the often excessive time spent scrolling through the addictive FYP.

https://twitter.com/yasmeenie/status/1295816991609991168

‘It takes little more than 30 seconds to find a pro-eating disorder account on TikTok and, once a user is following the right people, their For You page will quickly be flooded with content from similar users,’ says Ysabel Gerrard, a lecturer in digital media and society at the University of Sheffield. ‘This is because TikTok is essentially designed to show you what it thinks you want to see.’

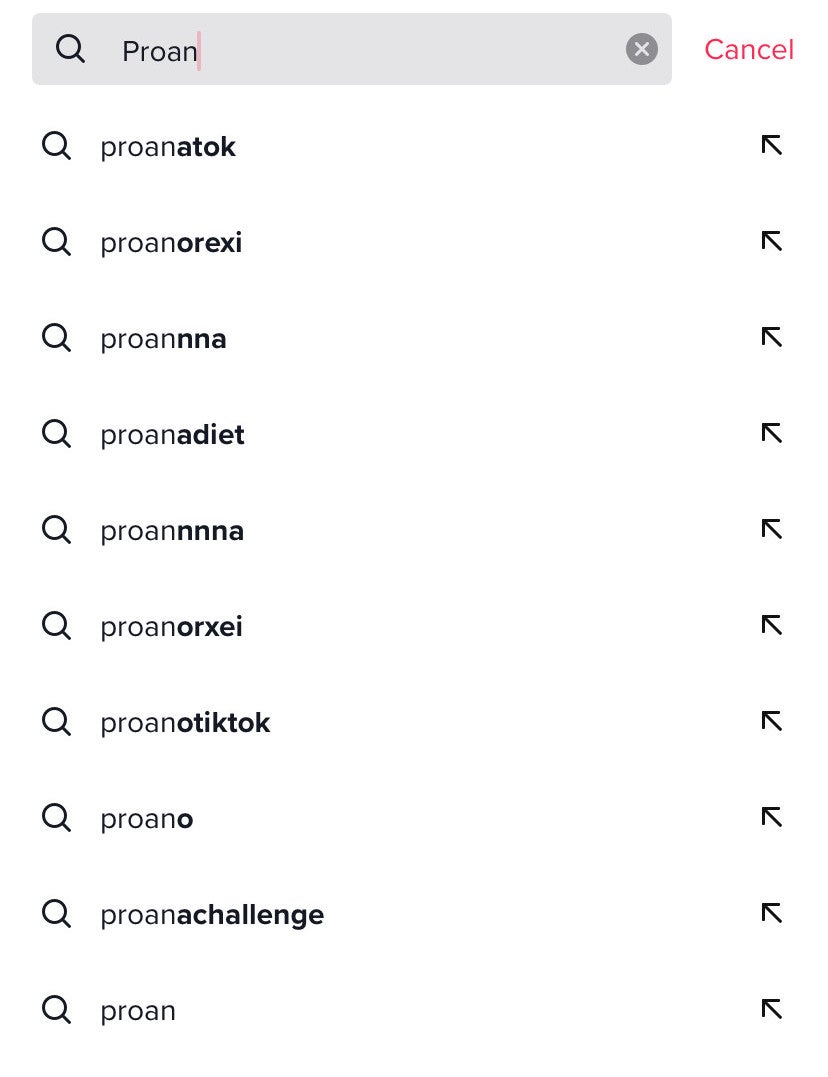

The larger issue, however, appears to be the company’s inability to stop users from getting around restrictions with deliberate variants or slight misspellings of well-known terms. While TikTok has been working to block hashtags such as ‘proana’ and ‘thinspo’, typing ‘proanotok’ or ‘thinspooo’ into the search bar immediately yields results, bypassing the censors far too easily.

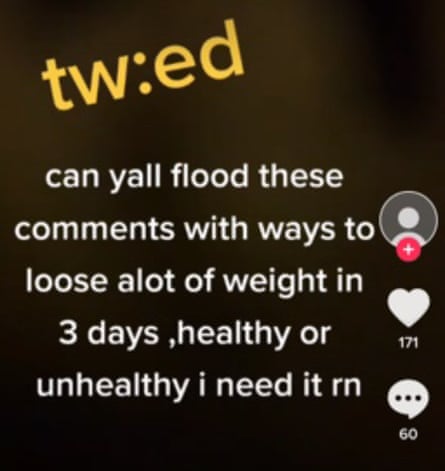

Of the numerous accounts that appear, one shows messages from a girl asking for tips on how to lose a lot of weight quickly ‘in a healthy or unhealthy way’, another is rife with suggestions of ‘low calorie safe foods for when you don’t want to purge’, and some even go as far as warning ‘if you don’t like stuff about starving leave please’ directly in their bios. Beyond the usual before-and-after and calorie-counting videos, TikTok is also riddled with content steeped in the same dark, ‘meme-centric’ humour found on platforms such as Twitter, where self-deprecating jokes are commonplace.

‘As soon as this issue was brought to our attention, we took action banning the accounts and removing the content that violated those guidelines, as well as banning particular search terms,’ a TikTok spokesperson said. ‘As content changes, we continue to work with expert partners, update our technology and review our processes to ensure we can respond to emerging and new harmful activities.’