The sweeping regulation arrives amid notable public mistrust of government and fears surrounding data privacy.

You’ve probably noticed in one way or another that the UK’s Online Safety Act is now in full effect. I, uh, read as much on July 24th.

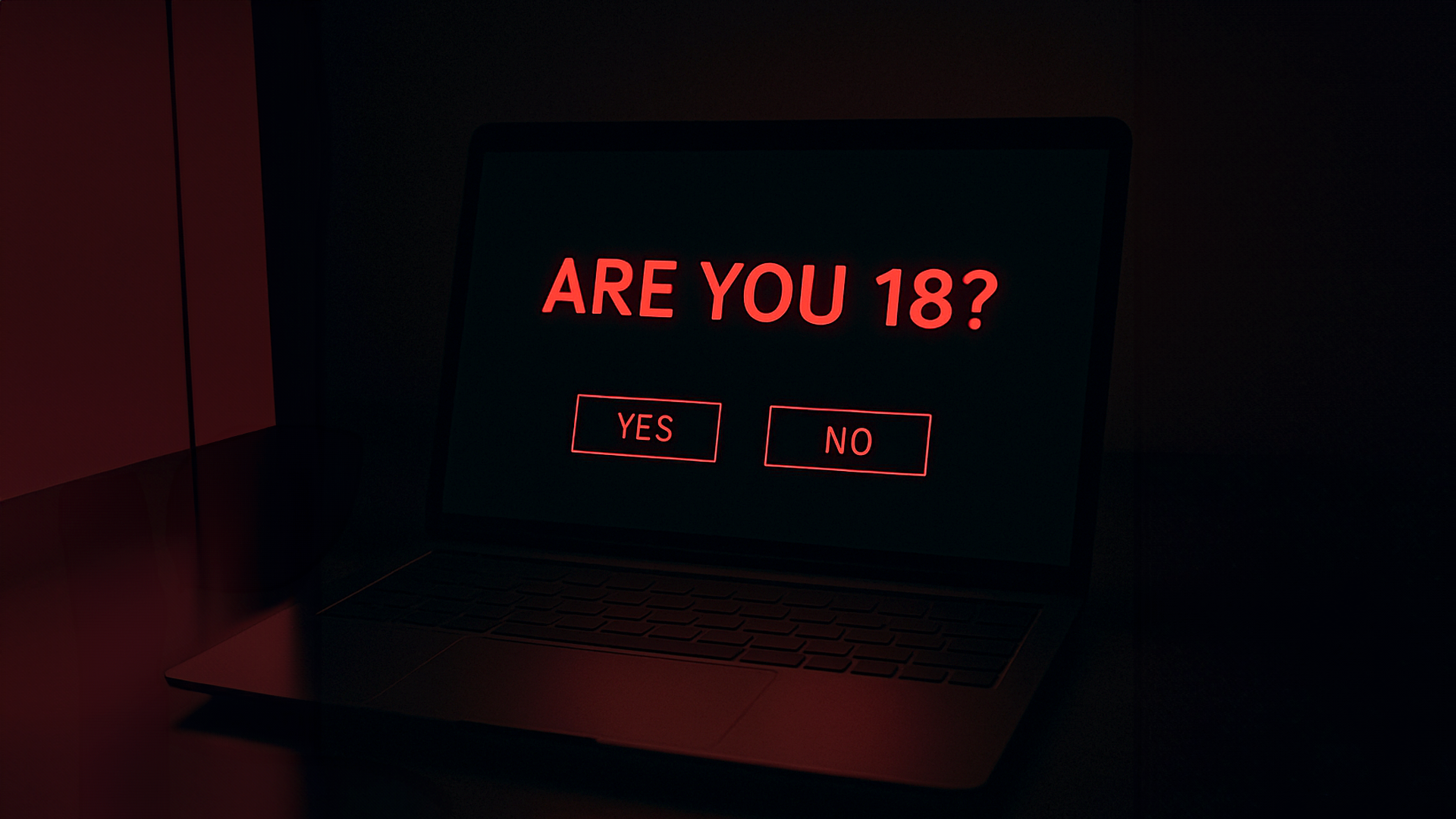

If you attempt to load up porn websites, certain Reddit pages, or content on TikTok, Instagram, YouTube, or Snapchat that isn’t deemed ‘child friendly’, you’ll be stopped in your tracks by a slew of confusing age verification checks.

There is no uniform way to prove your age: some platforms request verification through third-party apps, while others have developed creepy AI face tech that estimates your age. The former approach, fronted by providers like AgeChecked and VerifyMyAge, is currently the most widespread, requiring proof of age such as a passport or licence scan or a connection to your online banking, among other options.

The upside of the Act is that children are now better protected on social media, with platforms directly responsible – and liable – for the content posted on them. If an underage user sees and reports something harmful, the onus is on the platform to remove it or face potential fines. That covers graphic violence, pornography, and anything encouraging harmful behaviours.

Quite honestly, though, it feels as though the Act should have ended there.

Instead, the legislation spills over into murkier territory, handing sweeping powers to Ofcom and, some would argue, fostering a culture of surveillance under the guise of protection. Even if the Online Safety Act began with noble intentions, it’s now giving off the distinct whiff of overreach.