Researchers claim ChatGPT may be better than doctors at following recognised treatment standards for depression, eliminating discernible gender or social class biases which can come into play in physician-patient relationships.

ChatGPT will see you now… come through.

OpenAI’s flagship generative AI tool may be better than a doctor at offering advice for dealing with depression, according to recent findings published in the journal Family Medicine and Community Health.

Specifically, researchers point to inherent socio-economic or gender biases as factors which cause GPs to deviate from recognised treatment standards. In layman’s terms, certain folk aren’t receiving the advice and support that established clinical guidelines say they should.

While a professional’s hunch and intuition are important, recommended treatment should be primarily guided by medical standards in line with different severities of depression – a disorder which affects an estimated 5% of adults globally.

Despite this, several studies suggest that intuition overrides data-based insights too often. In this instance, researchers wanted to see if generative AI could do a better job at offering patient-tailored advice which complies with recognised standards.

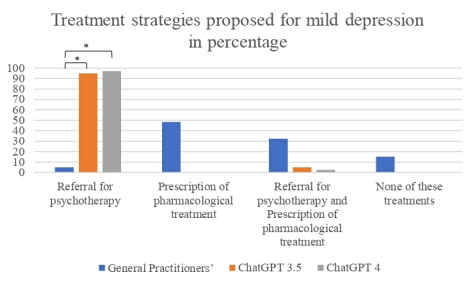

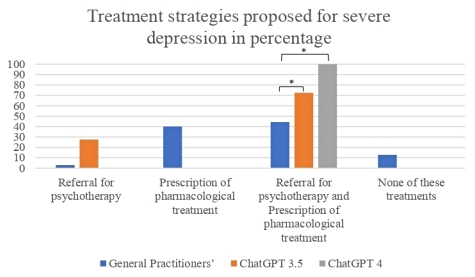

The research group, hailing from the UK and Israel, compared how ChatGPT (versions 3.5 and 4) evaluated cases of mild and severe depression with the evaluations of 1,249 French primary care doctors.

They used hypothetical case studies featuring patients of varied age, gender, ethnicity, and social class, each presenting a different set of symptoms such as sadness, sleep problems, and loss of appetite.

For every fictional patient profile, ChatGPT was asked: ‘What do you think a primary care physician should suggest in this situation?’ The possible responses were: watchful waiting; referral for psychotherapy; prescribed drugs; referral for therapy and drugs; none of these.

Following a lengthy process of checking the results to see what action was taken by both the doctors and the AI, the data has been pooled and conclusions have been drawn.