In a world where AI can be used to do almost anything, students are using language algorithms to write their assignments and are circumventing plagiarism software.

As a 14-year-old, I remember attempting to blag my French language homework by using translation tools on Google. My poor attempt failed to fool the teacher, and I promptly found myself in detention.

Despite my terrible execution all those years ago, however, it appears I may have been ahead of the curve.

With the ongoing boom in AI technology, which remains seriously unregulated, by the way, students are being awarded distinctions for homework and course assignments constructed entirely by computer algorithms.

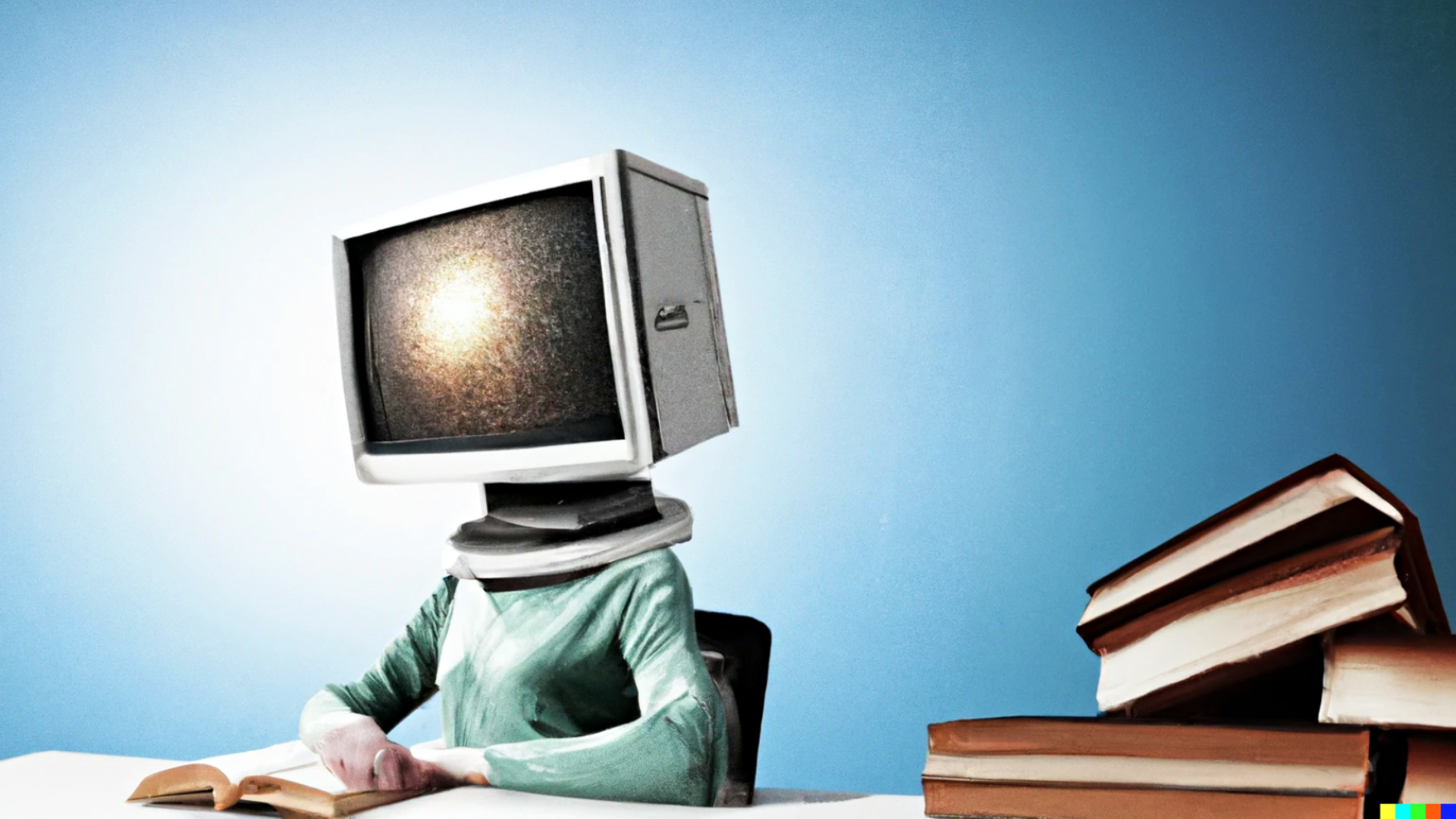

We regularly discuss the constant advancements in text-to-image generators like DALL-E, but the emergence of AI for literary work has gone somewhat under the radar. According to a recent investigation from Motherboard, that’s cause for concern for educational institutions.

I mean, who’s to say you’re not reading an article written by a machine right now?

How do language AI tools work?

Until very recently I was completely unaware that OpenAI – the developer of DALL-E – even had algorithms to create original language. Achieving this, it turns out, is no more difficult than creating absurd images.

In a matter of seconds, the program, dubbed GPT-3, can take user prompts and generate detailed paragraphs of information, drawing on patterns learned from text across the web rather than copying passages wholesale.

A drop-down menu helps the technology lean into a certain discipline and format of text. For instance, selecting ‘Question’ will provide straightforward answers including key contextual touchpoints, and ‘Debate’ generates original sentences in a more conversational tone.

With the right prompts for both style and information, extended responses can be produced from scratch including entire essays. As you can imagine, this has the potential to open a serious can of worms for schools, colleges, and universities.

As of right now, unearthing any attempt at subversion is nigh-on impossible too, given that plagiarism software can only detect instances of repeated phrases or sentences.
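To see why freshly generated text slips through, here is a rough sketch of phrase matching in Python. It is only an illustration, not how any real plagiarism product works: the function names, the five-word window, and the sample sentences are all made up for this example.

```python
import re


def word_ngrams(text, n=5):
    """Return the set of lowercase n-word phrases found in the text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def overlap_score(submission, source, n=5):
    """Fraction of the submission's n-word phrases that also appear in the source."""
    sub = word_ngrams(submission, n)
    if not sub:
        return 0.0
    return len(sub & word_ngrams(source, n)) / len(sub)


# Hypothetical sample texts, invented for this illustration.
source = "The French Revolution began in 1789 and reshaped European politics for decades."
copied = "As we know, the French Revolution began in 1789 and reshaped European politics for decades."
reworded = "Starting in 1789, upheaval in France went on to transform politics across Europe."

print("copied:  ", overlap_score(copied, source))    # high: most phrases repeat the source
print("reworded:", overlap_score(reworded, source))  # no five-word phrase is shared
```

The copied sentence shares most of its five-word phrases with the source, so it gets flagged. The reworded one shares none, which is roughly the position a checker is in when every sentence has been written from scratch by a machine.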

While longer-form instances of cheating may stick out to the trained eye, the disparity between plagiarism tech and AI will only widen with the release of GPT-4 – reportedly trained on 100 trillion machine learning parameters.

Kids are using AI to write essays and get straight As pic.twitter.com/i0yyXiVEtU

— Peter Yang (@petergyang) September 24, 2022