In scary/cool science news of the day, US scientists have taken a significant step forward in creating computers that communicate directly with our brains.

Neuroscientists and speech experts at the University of California San Francisco (UCSF) have developed an artificial intelligence that’s able to read human brainwaves and convert them to speech.

The breakthrough, first reported in the journal Nature, has implications both for people who have conditions that have cost them the power of speech (M.S. or stroke sufferers, for example), and for the future robot apocalypse. They can literally read our thoughts now, guys. Is it time to be concerned?

All jokes aside, this tech is ground-breaking for the seamless integration of machines with human mechanisms, potentially reducing the need for manual user input in programs. Finally, MS Paint will understand that what you managed to create IRL wasn’t actually what was in your head.

Brainwave-to-speech technology has made rapid progress in the past ten years, having previously stalled at translating raw brain data into words. The complexity of neurological pathways, and the individuality of each person’s brain patterns, meant that it was generally only possible to generate one word at a time.

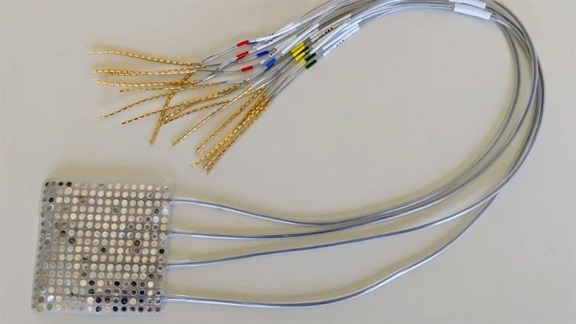

However, whilst people’s thought patterns are unique and infinitely complex, the signals from the brain to the mouth, jaw, and tongue that produce speech are fairly universal. Hence, rather than studying only the brain, the UCSF scientists used two AIs to analyse both neurological signals and the movements of the mouth during dialogue.

The video below shows an X-ray view of what our mouth and throat actually look like when we talk.

When the information collected by these AIs was fed into a synthesiser, something that more or less resembled complex sentences came out.